This document describes technical context and analysis to support a proposal for development of a new Linux device driver destined for mainline Linux and use by OpenXT, to introduce Hypervisor-Mediated data eXchange (HMX) into the data transport of the popular VirtIO suite of Linux virtual device drivers, by leveraging Argo in Xen. Daniel Smith proposed this idea, which has been supported in discussions by Christopher Clark and Rich Persaud of MBO/BAE Systems, and Eric Chanudet and Nick Krasnoff of AIS. Christopher is the primary author of this version of this document.

– August 2020

VirtIO is a virtual device driver standard developed originally for the Linux kernel, drawing upon the lessons learned during the development of paravirtualized device drivers for Xen, KVM and other hypervisors. It aimed to become a “de-facto standard for virtual I/O devices”, and to some extent has succeeded in doing so. VirtIO is now widely implemented in both software and hardware; it is commonly the first choice for virtual driver implementation in new virtualization technologies, and the specification is now maintained under governance of the OASIS open standards organization.

VirtIO’s system architecture abstracts device-specific and device-class-specific interfaces and functionality from the transport mechanisms that move data and issue notifications within the kernel and across virtual machine boundaries. It is attractive to developers seeking to implement new drivers for a virtual device because VirtIO provides documented specified interfaces with a well-designed, efficient and maintained common core implementation that can be leveraged to significantly reduce the amount of work required for a new virtual device driver.

VirtIO follows the Xen PV driver model of split-device drivers, where a front-end device driver runs within the guest virtual machine to provide the device abstraction to the guest kernel, and a back-end driver runs outside the VM, in platform-provided software - eg. within a QEMU device emulator - to communicate with the front-end driver and provide mediated access to physical device resources.

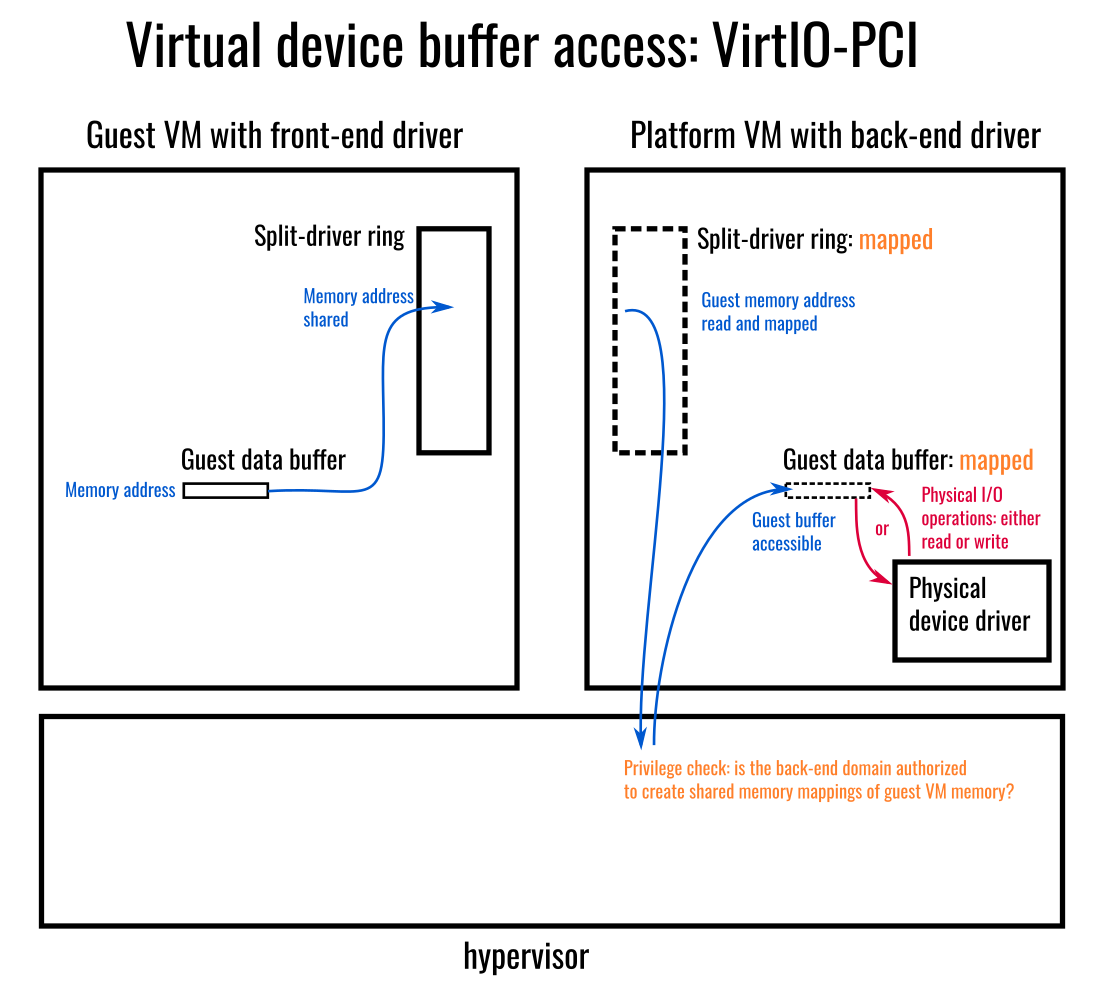

A critical property of the current common VirtIO implementations is that they prevent enforcement of strong isolation between the front-end and back-end virtual machines, since the back-end VirtIO device driver must be able to obtain direct access to the memory owned by the virtual machine running the front-end VirtIO device driver. That is, the VM hosting the back-end driver has significant privilege over any VM running a front-end driver.

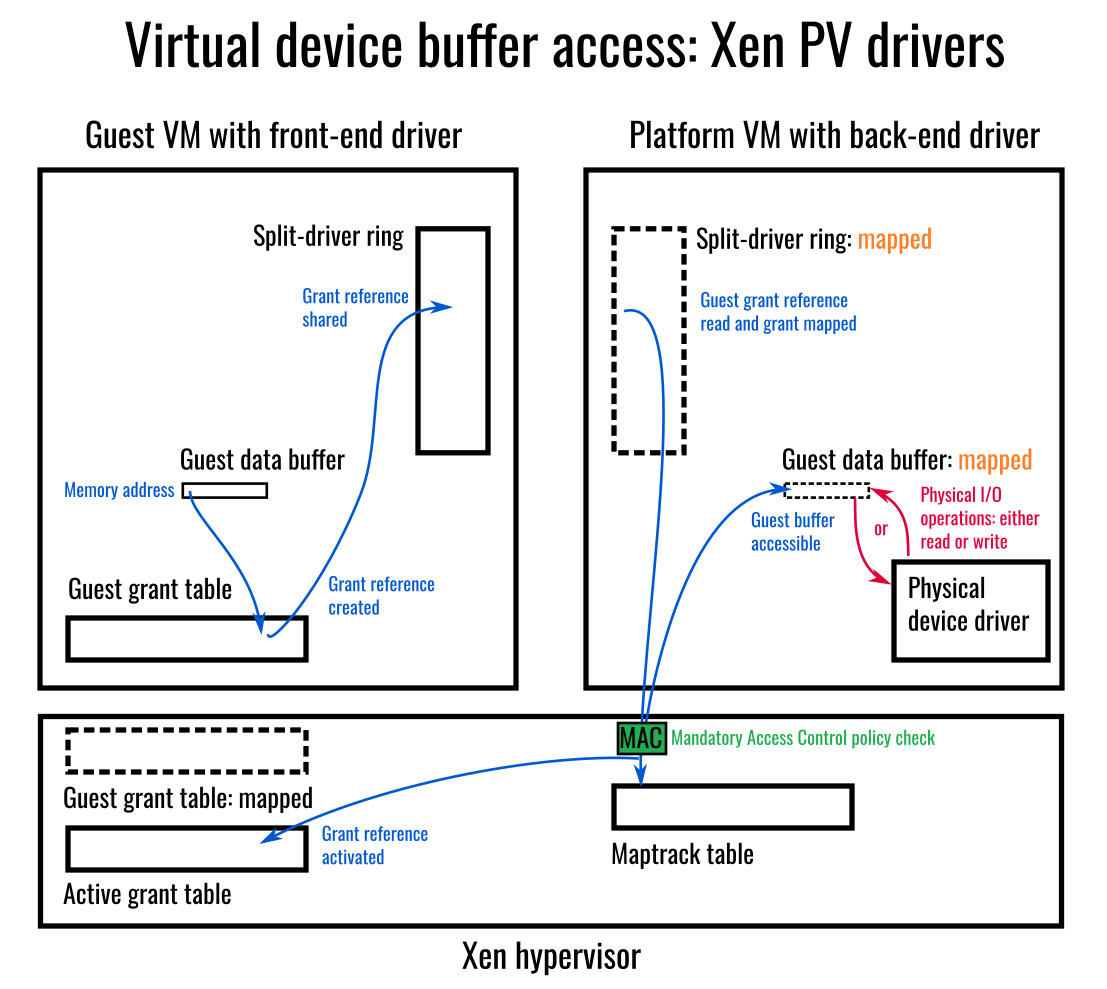

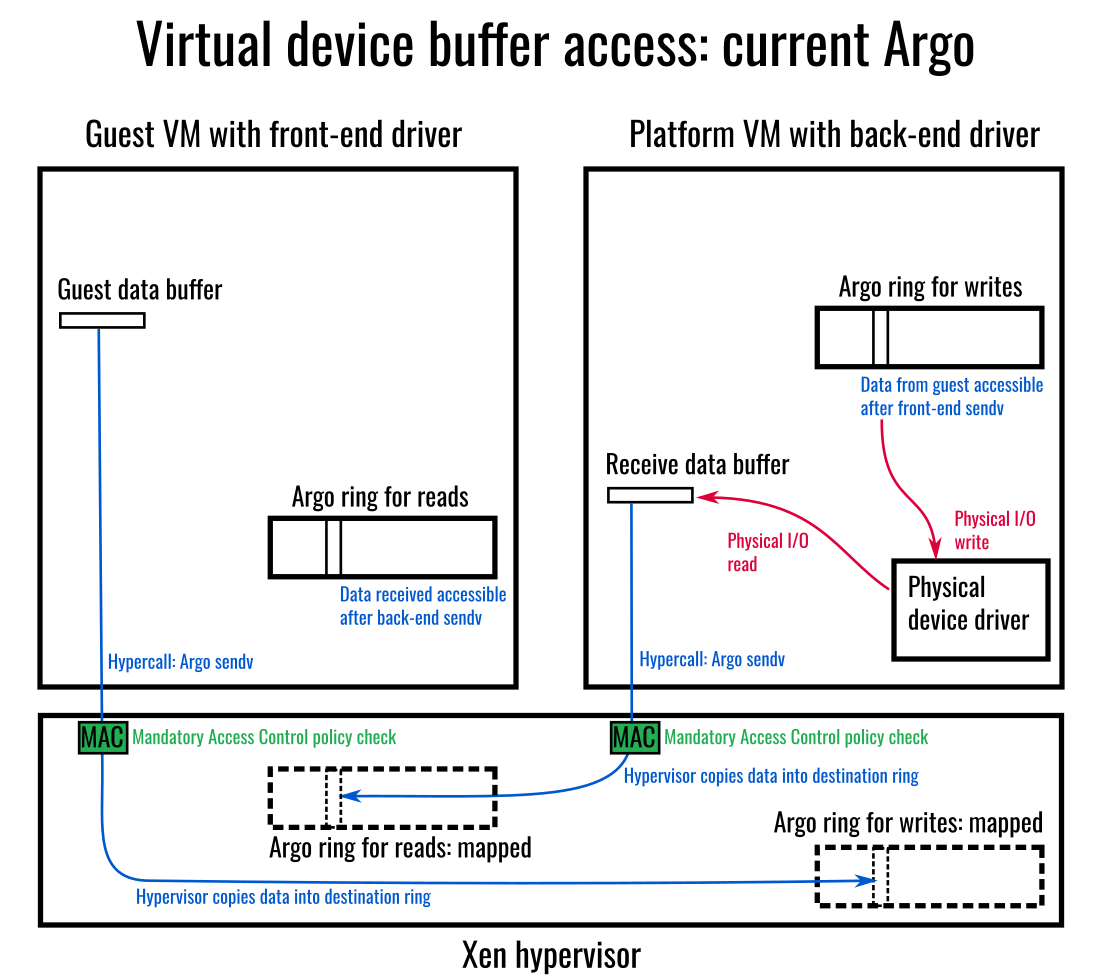

Xen’s PV drivers use the grant-table mechanism to confine shared memory access to specific memory pages: permission to access those pages is explicitly granted by the driver in the VM that owns the memory. Argo goes further and achieves stronger isolation, since it requires no memory sharing between communicating virtual machines.

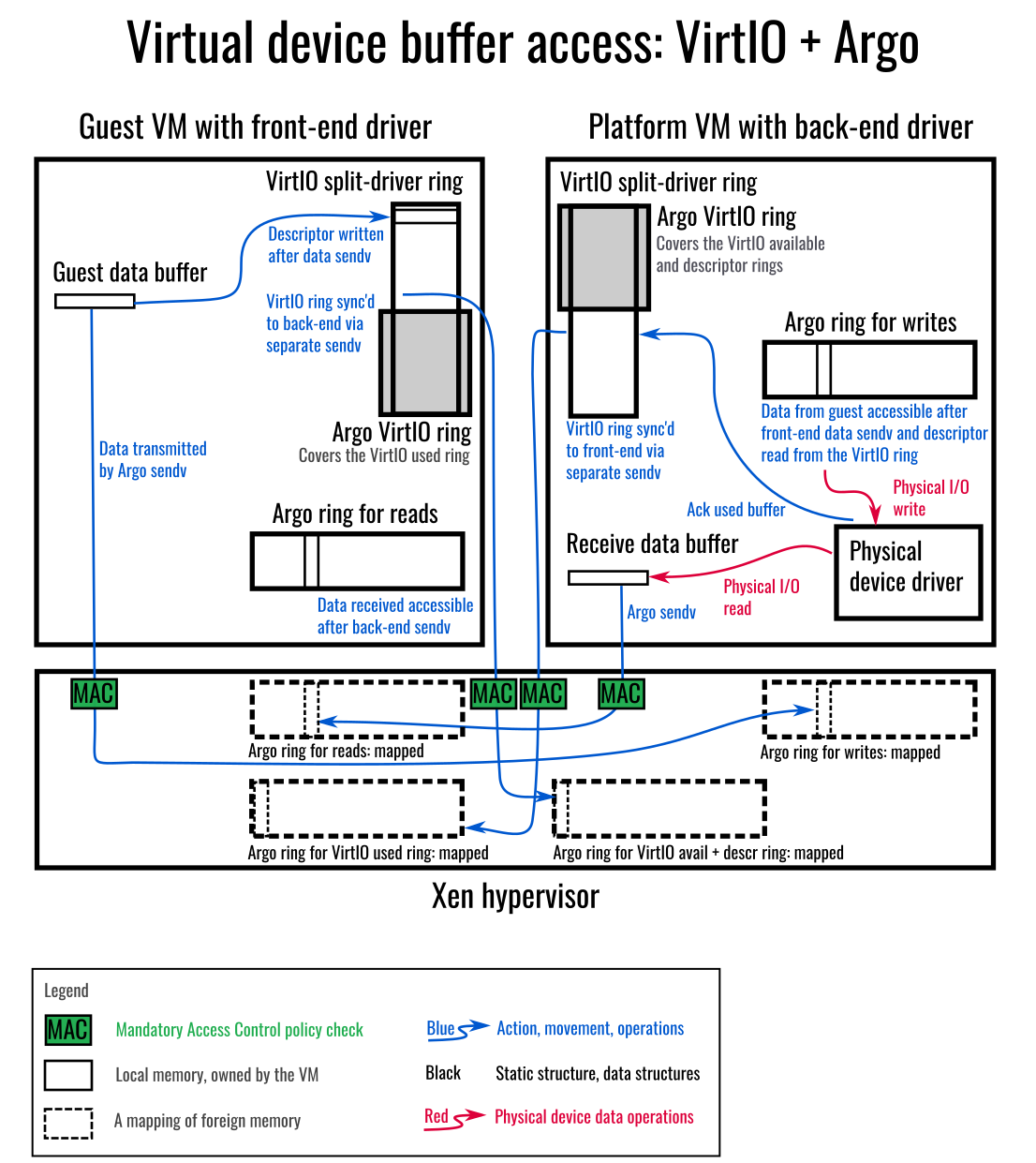

In contrast to Xen’s current driver transport options, the current implementations of virtio transports pass memory addresses directly across the VM boundary, under the assumption of shared memory access, and thereby require the back-end to have sufficient privilege to directly access any memory that the front-end driver refers to. This has presented a challenge for the suitability of using virtio drivers for Xen deployments where isolation is a requirement. Fortunately, a path exists for integration of the Argo transport into VirtIO which can address this and enable use of the existing body of virtio device drivers with isolation maintained and mandatory access control enforced: consequently this system architecture is significantly differentiated from other options for virtual devices.
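To make concrete what passing memory addresses across the VM boundary means: in the split virtqueue format of the VirtIO 1.1 specification, each descriptor carries a guest-physical buffer address and a length, which the back-end is expected to dereference. The following is a minimal sketch that packs and unpacks one such descriptor; the helper names `pack_desc` and `unpack_desc` and the example address are illustrative assumptions, not part of any existing driver.

```python
import struct

# Split-virtqueue descriptor layout (VirtIO 1.1 specification):
#   le64 addr, le32 len, le16 flags, le16 next  -> 16 bytes
VIRTQ_DESC = struct.Struct("<QIHH")
VIRTQ_DESC_F_NEXT = 1    # buffer continues in the descriptor named by 'next'
VIRTQ_DESC_F_WRITE = 2   # buffer is write-only for the device


def pack_desc(addr, length, flags=0, next_idx=0):
    """Encode one descriptor as the front-end would place it in the table."""
    return VIRTQ_DESC.pack(addr, length, flags, next_idx)


def unpack_desc(raw):
    """Decode one descriptor as the back-end would read it."""
    addr, length, flags, next_idx = VIRTQ_DESC.unpack(raw)
    return {"addr": addr, "len": length, "flags": flags, "next": next_idx}


# Made-up guest-physical address of a 4 KiB buffer owned by the front-end VM.
raw = pack_desc(0x12345000, 4096, VIRTQ_DESC_F_WRITE)
desc = unpack_desc(raw)
```

The back-end receives only `desc["addr"]` and `desc["len"]`; to service the request with the existing transports it has to map that guest memory, which is the privilege the Argo transport is intended to remove.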

In addition to the front-end / back-end split device driver model, there are further standard elements of VirtIO system architecture.

For detailed reference, VirtIO is described in the “VirtIO 1.1 specification” OASIS standards document.

The front-end device driver architecture imposes tighter constraints on implementation direction, and so is more important to understand in detail, since it is this that is already implemented in the wide body of existing VirtIO device drivers that we are aiming to enable use of. The back-end software is implemented in the platform-provided software - ie. the hypervisor, toolstack, a platform-provided VM or a device emulator, etc. - where we have more flexibility in implementation options, and the interface is determined by both the host virtualization platform and the new transport driver that we are intending to create.

There are multiple classes of virtio device driver within the Linux kernel; these include the general class of front-end virtio device drivers, which provide function-specific logic to implement virtual devices - eg. a virtual block device driver for storage - and the transport virtio device drivers, which are responsible for device discovery with the platform and provision of data transport across the VM boundary between the front-end drivers and the corresponding remote back-end driver running outside the virtual machine.

There are several implementations of virtio transport device drivers in Linux. Each implements a common interface within the kernel, and they are designed to be interchangeable and compatible with the VirtIO front-end drivers, so the same front-end driver can use different transports on different systems. Transports can coexist: different virtual devices can use different transports within the same virtual machine at the same time.
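The interchangeability described above can be illustrated with a toy model. In the Linux kernel the common interface is an operations table supplied by each transport (`struct virtio_config_ops`); the Python classes and method names below are hypothetical stand-ins chosen for illustration, and the transports only record what they are asked to send.

```python
from abc import ABC, abstractmethod


class Transport(ABC):
    """Toy stand-in for the common in-kernel transport interface."""

    @abstractmethod
    def name(self) -> str: ...

    @abstractmethod
    def send(self, payload: bytes) -> None: ...


class RecordingTransport(Transport):
    """Records payloads instead of moving them across a VM boundary."""

    def __init__(self, label: str):
        self._label = label
        self.sent = []

    def name(self) -> str:
        return self._label

    def send(self, payload: bytes) -> None:
        self.sent.append(payload)


class BlockFrontEnd:
    """Hypothetical front-end driver: it depends only on Transport."""

    def __init__(self, transport: Transport):
        self.transport = transport

    def write_sector(self, sector: int, data: bytes) -> None:
        header = sector.to_bytes(8, "little")
        self.transport.send(header + data)


# The same front-end code runs unchanged over either transport.
pci = RecordingTransport("virtio-pci")
argo = RecordingTransport("virtio-argo")
for transport in (pci, argo):
    BlockFrontEnd(transport).write_sector(7, b"data")
```

Because `BlockFrontEnd` never refers to a specific transport, adding a new transport does not require changes to the front-end driver, which is the property the VirtIO-Argo proposal relies on.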

The back-end domain requires sufficient privilege with the hypervisor to be able to map the memory of any buffers used for I/O with the device by the guest VM.

Enabling Virtio to use the Argo interdomain communication mechanism for data transport across the VM boundary will address three critical requirements:

Preserve strong isolation between the two ends of the split device driver

ie. remove the need for any shared memory between domains or any privilege to map the memory belonging to the other domain

Enable enforcement of granular mandatory access control policy over the communicating endpoints

ie. Use Xen’s XSM/Flask existing control over Argo communication, and leverage new Argo MAC capabilities as they are introduced to govern Virtio devices

Enable Argo support in the mainline Linux kernel

The VirtIO-Argo transport device driver will be a smaller, simpler device driver than the existing general Argo Linux driver. It should not need to expose functionality to userspace directly, and should be simpler to develop for inclusion in the mainline Linux kernel, while still enabling Argo to be used for driver front-ends.

ie. It avoids many challenges with design, implementation and upstreaming of the existing Argo Linux device driver into the Linux kernel.

The proposal is to implement a new VirtIO transport driver for Linux that utilizes Argo. It will be used within guest virtual machines, and be compatible with the existing VirtIO front-end device drivers. It will be paired with a corresponding new VirtIO-Argo back-end to run within the Qemu device emulator, in the same fashion as the existing VirtIO transport back-ends, and the back-end will use libargo and the (non-VirtIO) Argo Linux driver.

VirtIO device drivers are included in the mainline Linux kernel and enabled in most modern Linux distributions. There is a menu for VirtIO drivers in the kernel Kconfig to enable inclusion of drivers as required. Once the VirtIO-Argo transport driver has been reviewed upstream and accepted into the mainline Linux kernel, it should propagate for inclusion in the Linux distributions, which will enable seamless deployment of guest VMs on VirtIO-Argo hypervisor platforms with no further in-guest drivers required.

Prior to the VirtIO-Argo device driver being made available via the Linux distributions, installation will require a Linux kernel module to be installed for the VirtIO-Argo driver, which will then enable compatibility with the other existing VirtIO device drivers in the guest. A method of switching devices over from using their prior driver to the newly activated VirtIO-Argo driver will need to be designed; this is the same issue that the existing Xen PV drivers have handled when handing off responsibility from running on emulated devices over to the Xen PV driver.

Open Source VirtIO drivers for Windows are available, with some Linux distributions, eg. Ubuntu and Fedora, including WHQL Certified drivers. These enable Windows guest VMs to run with virtual devices provided by the VirtIO backends in QEMU. It has not yet been ascertained whether the Windows VirtIO implementation is suitable for introduction of a VirtIO-Argo transport driver in the same way as proposed here for Linux.

The QEMU device emulator implements the Virtio transport that the front-end will connect to. Current QEMU 5.0 implements both the virtio-pci and virtio-mmio common transports.

For QEMU to be able to use Argo, it will need an Argo Linux kernel device driver, with similar functionality to the existing Argo Linux driver.

The toolstack of the hypervisor is responsible for configuring and establishing the back-end devices according to the virtual machine configuration. It will need to be aware of the VirtIO-Argo transport and initialize the back-ends for each VM with a suitable configuration for it.

The DPDK userspace device driver software also implements an alternative set of VirtIO virtual device back-ends to QEMU, which can also be used to support VMs with VirtIO virtual devices. Please note that the DPDK implementation uses a “packed virtqueue” data structure, as opposed to the original default “split virtqueue”, across the VM boundary, and this is not targeted for compatibility in the initial version of the VirtIO-Argo transport.
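One way the exclusion of packed virtqueues could be expressed is through ordinary VirtIO feature negotiation, where the driver side accepts only a subset of the feature bits the device offers. The sketch below uses the feature bit numbers from the VirtIO 1.1 specification; the assumption that an initial VirtIO-Argo transport would simply not accept `VIRTIO_F_RING_PACKED` is ours, and the helper names are illustrative.

```python
# Feature bit numbers from the VirtIO 1.1 specification.
VIRTIO_F_VERSION_1 = 32
VIRTIO_F_RING_PACKED = 34


def bit(n):
    """Return the feature mask with only bit n set."""
    return 1 << n


def negotiate(device_features, driver_features):
    """The driver side may accept only features that the device offers."""
    return device_features & driver_features


# A device that offers both the 1.x interface and packed virtqueues.
device_offers = bit(VIRTIO_F_VERSION_1) | bit(VIRTIO_F_RING_PACKED)

# Assumption: the initial transport accepts VERSION_1 but not RING_PACKED.
transport_accepts = bit(VIRTIO_F_VERSION_1)

agreed = negotiate(device_offers, transport_accepts)
uses_packed = bool(agreed & bit(VIRTIO_F_RING_PACKED))
```

With these inputs the agreed feature set contains only `VIRTIO_F_VERSION_1`, so both ends would use the split virtqueue layout; a back-end that cannot operate without packed virtqueues would not be usable in this configuration.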

Hardware implementations of VirtIO interfaces will be unaffected by the VirtIO-Argo system.

OpenXT currently uses the Xen PV drivers for virtual devices.

The Xen PV drivers use the Grant Tables for the front-end domain to grant access to the back-end domain to be able to establish shared memory access to specific buffers for physical I/O device operations.

In addition to the Xen PV drivers, OpenXT also uses Argo, with an Argo Linux device driver, for inter-domain communication. In contrast to the grant-table data path used by the Xen PV drivers, which establishes and tears down shared memory regions between communicating VMs, the Argo data path is hypervisor-mediated, with Mandatory Access Control enforced on every data movement operation.
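The contrast with shared memory can be sketched with a toy model of a hypervisor-mediated data path. This is not the Argo hypercall ABI: the class `ToyHypervisor`, its method signatures and the policy representation are assumptions made for illustration, with method names loosely following the Argo ring registration and send operations.

```python
class ToyHypervisor:
    """Toy model: every transfer is a policy-checked copy by the hypervisor."""

    def __init__(self, policy):
        # policy: set of (sender_domid, receiver_domid) pairs allowed to talk
        self.policy = set(policy)
        self.rings = {}  # (receiver_domid, port) -> list of received messages

    def register_ring(self, domid, port):
        """The receiver registers a ring that it alone owns."""
        self.rings[(domid, port)] = []

    def sendv(self, src_domid, dst_domid, dst_port, payload):
        """Check policy on this send, then copy the data into the ring."""
        if (src_domid, dst_domid) not in self.policy:
            raise PermissionError("denied by access control policy")
        ring = self.rings.get((dst_domid, dst_port))
        if ring is None:
            raise ConnectionRefusedError("no ring registered at destination")
        ring.append(bytes(payload))  # a copy: sender and receiver share nothing


hv = ToyHypervisor(policy={(1, 2)})   # only domain 1 may send to domain 2
hv.register_ring(domid=2, port=5000)

buf = bytearray(b"hello")
hv.sendv(1, 2, 5000, buf)
buf[:] = b"XXXXX"                     # later sender-side changes are not visible

try:
    hv.sendv(3, 2, 5000, b"intruder")
    denied = False
except PermissionError:
    denied = True
```

The receiver only ever sees data that the hypervisor copied after a successful policy check, whereas with a grant-mapped page the back-end reads and writes the front-end's memory directly for as long as the mapping exists.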

The challenges with using Argo with its own Linux device driver installed in the guest are:

The Argo Linux driver is not currently suitable for upstreaming to mainline Linux.

A development plan exists for improvement, but it requires significant effort, and resourcing it has proven challenging.

To produce an Argo-transport driver of each virtual device driver class, such as networking, storage, etc., suitable for replacing the existing Xen PV drivers, is work that would be specific to only the Xen software ecosystem.

Not all Xen-based projects would be likely to switch virtual driver implementation, given the different characteristics and requirements of cloud platforms, client platforms and embedded systems, and the existing investment in the Xen PV drivers software

eg. see the recent automotive community development of PV audio for in-vehicle entertainment systems.

This stands in contrast to the VirtIO network and storage virtual device drivers that can be used unchanged on a number of different hypervisors.

Adding Argo as a transport for VirtIO will retain Argo’s MAC policy checks on all data movement, while allowing use of the VirtIO virtual device drivers and device implementations.

With the VirtIO virtual device drivers using the VirtIO-Argo transport driver, OpenXT can retire use of the Xen PV drivers within platform VMs and guest workloads. This removes shared memory from the data path of the device drivers, allows for some hypervisor functionality, such as the grant tables, to be disabled, and makes the virtual device driver data path HMX-compliant.

In addition, as new virtual device classes in Linux have VirtIO drivers implemented, these should transparently be enabled with Mandatory Access Control, via the existing virtio-argo transport driver, potentially without further effort required – although please note that for some cases (eg. graphics) optimizing performance characteristics may require additional effort.

Design of the ACPI-table-based virtual device discovery mechanism for the VirtIO-Argo transport

Research and design of the Argo-virtio backend transport architecture

Toolstack

QEMU

Review XSM/Flask policy control points in Argo for the split-driver use case

xenstore interaction, if any

An Argo specification document

Required for supporting embedded system evaluations

Repeatedly requested by interested parties in the Xen ecosystem who work with safety requirements.

This will be appropriate for inclusion in the Xen Project documentation

A performance evaluation of Argo

Report required to support external evaluations of Argo

Question has been raised in every forum where Argo has been presented

Needs to cover Intel, AMD and Arm platforms

Variation in processor cache architectures and memory consistency models likely to have appreciable effects

Development to improve performance based on measurements

Notification delivery is a known area where improvement should be possible

See the first series of Argo posted during Xen upstreaming for a non-event based interrupt delivery path

Split-driver use case is a priority

bandwidth important for bulk data transport

latency important for interactive and media-delivery (eg. IVI systems)

The VirtIO 1.1 specification, OASIS

https://docs.oasis-open.org/virtio/virtio/v1.1/csprd01/virtio-v1.1-csprd01.html

virtio: Towards a De-Facto Standard For Virtual I/O Devices

Rusty Russell, IBM OzLabs; ACM SIGOPS Operating Systems Review, 2008.

https://ozlabs.org/~rusty/virtio-spec/virtio-paper.pdf

Xen and the Art of Virtualization

Paul Barham, Boris Dragovic, Keir Fraser, Steven Hand, Tim Harris, Alex Ho, Rolf Neugebauer, Ian Pratt, Andrew Warfield; ACM Symposium on Operating System Principles, 2003

https://www.cl.cam.ac.uk/research/srg/netos/papers/2003-xensosp.pdf

Project ACRN: 1.0 chose to implement virtio

https://projectacrn.org/acrn-project-releases-version-1-0/

Solo5 unikernel runtime: chose to implement virtio

https://mirage.io/blog/introducing-solo5

Windows VirtIO drivers

https://www.linux-kvm.org/page/WindowsGuestDrivers/Download_Drivers

https://github.com/virtio-win/kvm-guest-drivers-windows

Attendees:

Christopher Clark, Rich Persaud; MBO/BAE

Daniel Smith; Apertus Solutions

Eric Chanudet, Nick Krasnoff; AIS Boston

Actions:

Christopher to produce a draft plan, post to Wire, discuss and then put it onto a private page on the OpenXT confluence wiki

Christopher and Eric to commence building a prototype as funding permits, with Daniel providing design input and review

Background: the Virtio implementation in the Linux kernel is stacked:

there are many virtio drivers for different virtual device functions

each virtio driver uses a common in-kernel virtio transport API, and there are multiple alternative transport implementations

transport negotiation proceeds, then driver-level negotiation to establish the frontend-backend communication for each driver

KVM uses Qemu to provide backend implementations for devices.

To implement Argo as a Virtio transport, will need to make Qemu Argo-aware.

Typically a PCI device is emulated by Qemu, on a bus provided to the guest.

The Bus:Device:Function (bdf) triple maps to Qemu; the guest loads the PCI virtio transport when the PCI device with that bdf is detected.

For Xen, this is OK for HVM guests, but since the platform PCI device is not exposed to PVH (or PV) guests, it is not a sufficient method for those cases.

The existing main Xen PCI code is small, used for discovery.

vPCI is experimental full emulation of PCI within Xen -- Daniel does not favour this direction.

Paul Durrant has a draft VFIO implementation plan, previously circulated.

Paul is working on emulating PCI devices directly via an IOReq server (see: "demu").

Daniel is interested in the Linux kernel packet mmap, to avoid one world-switch when processing data.

Christopher raised the Cambridge Argo port-connection design for external connection of Argo ports between VMs.

Eric raised the vsock-argo implementation; discussion mentioned the Argo development wiki page that describes requirements and plan that has previously been discussed and agreed for future Argo Linux driver development, which needs to include a non-vsock interface for administrative actions such as runtime firewall configuration.

Daniel is positively inclined towards using ACPI tables for discovery and notes the executable ACPI table feature, via AML.

Naming: an Argo transport for virtio in the Linux kernel would, to match the existing naming scheme, be: virtio-argo.c

Group inclined not to require XenStore.

Relevant to dom0less systems where XenStore is not necessarily present.

Don't want to introduce another dependency on it.

uXen demonstrates that it is not a requirement.

XenStore is a performance bottleneck (see NoXS work), bad interface, etc.

Argo (domain identifier + port) tuple is needed for each end

Note: the toolstack knows about both the front and backend and could be able to connect the two together.

Note: "dom0less" case is important: no control domain or toolstack executing concurrently to perform any connection handshake.

DomB should be able to provide configuration information to each domain and have connections succeed as the drivers in the different domains connect to each other.

domain reboot plan in that case: to be considered

Note: options: frontend can:

use Argo wildcards, or

know which domain+port to connect to the backend

or be preconfigured by some external entity to enable the connection (with Argo modified to enable this, per Cambridge plan)
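The difference between the wildcard option and the known-peer option above can be sketched as follows. An Argo ring is identified by the owning domain, a port and a partner domain, where the partner may be a wildcard; the `RingId` class, the `ANY` placeholder and the `accepts` helper are hypothetical names for illustration and do not reproduce the Xen header definitions.

```python
from dataclasses import dataclass
from typing import Optional

ANY = None  # placeholder for a wildcard partner, not the real Xen constant


@dataclass(frozen=True)
class RingId:
    domain_id: int              # domain that owns and receives on the ring
    port: int                   # Argo port within that domain
    partner_id: Optional[int]   # the one permitted sender, or ANY


def accepts(ring: RingId, sender_domid: int) -> bool:
    """A wildcard ring accepts any sender; a pinned ring accepts only its partner."""
    return ring.partner_id is ANY or ring.partner_id == sender_domid


# Option 1: the front-end registers a wildcard ring and lets the back-end find it.
wildcard_ring = RingId(domain_id=5, port=1000, partner_id=ANY)

# Option 2: the front-end already knows that its back-end lives in domain 2.
pinned_ring = RingId(domain_id=5, port=1000, partner_id=2)
```

A wildcard ring leaves reachability to be scoped by policy in the hypervisor, while a pinned ring needs the back-end domain identifier to be known in advance, which matters if a back-end is restarted under a different domid.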

Note: ability to restart a backend (potentially to a different domid) is valuable

Note: Qemu is already used as a single-purpose backend driver for storage.

ref: use of Qemu in Xen-on-ARM (PVH architecture, not HVM device emulator)

ref: Qemu presence in the xencommons init scripts

ref: qcow filesystem driver

ref: XenServer storage?

Options:

PCI device, with emulation provided by Xen

ACPI tables

dynamic content ok: cite existing CPU hotplug behaviour

executable content ok: AML

External connection of Argo ports, performed by a toolstack

ref: Cambridge Argo design discussion, Q4 2019

Use a wildcard ring on the frontend side for driver to autoconnect

can scope reachability via the Argo firewall (XSM or nextgen impl)

Note:

ACPI plan is good for compatibility with DomB work, where ACPI tables are already being populated to enable PVH guest launch.

ACPI table -> indicates the Argo tuple(s) for a guest to register

virtio-argo transport

note: if multiple driver domains, will need (at least) one transport per driver domain

toolstack passes the backend configuration info to the qemu (or demu) instance that is running the backend driver

qemu: has an added argo backend for virtio

the backend connects to the frontend to negotiate transport

For a first cut: just claim a predefined port and use that to avoid the need for interacting with an ACPI table.

an early basic virtio transport over Argo as a proof of viability

describe compatibility of the plan with respect to the Cambridge Argo design discussion, which covered Argo handling communication across nested virtualization.

how could a new CPU VMFUNC assist Argo?

aim: to obviate or mitigate the need for VMEXITs in the data path.

look at v4v's careful use of interrupts (rather than events) for delivery of notifications: should be able to reduce the number of Argo VMEXITs.

-> see the Bromium uxen v4v driver and the first-posted round of the Argo upstreaming series.

Copyright (c) 2020 BAE Systems.

Document author: Christopher Clark.

This work is licensed under the Creative Commons Attribution Share-Alike 4.0 International License.

To view a copy of this license, visit https://creativecommons.org/licenses/by-sa/4.0/.